Would you still go for a swim if you saw that sign on the beach?

Probably not.

Most people would agree that a swim in the sea is not worth dying for.

Now then, what drives people to cut corners in software engineering?

I think the factors that are weighed in to take these decisions are diametrically opposed to those of swimming in shark infested waters:

- The immediate gain looks substantial

- The consequences are far off and uncertain.

The Technical Debt metaphor mitigates the second factor but it might not be enough to deter compulsive corner cutters.

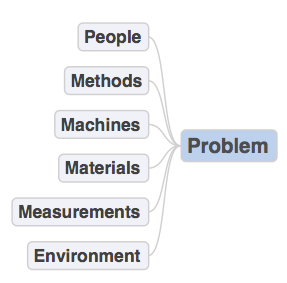

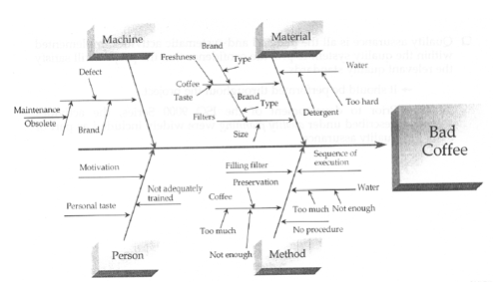

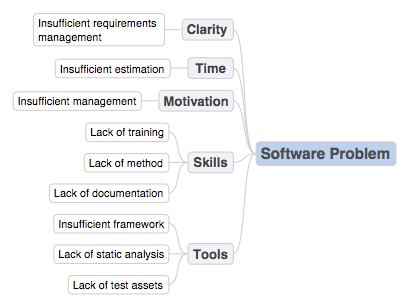

As I explained in a previous post, the technical debt involves several creditors because what drives people to cut corners on software quality usually has ar least already made two more victims along the way

.

.

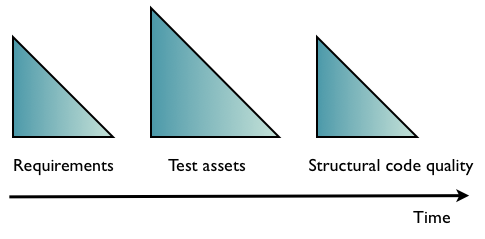

Let’s study what would be the first steps of paying back the technical debt of a legacy application that has become a big black box. We’ll assume that the strategy to fix the application has been agreed upon, that a static analysis tool has been procured, installed and configured and that all the violations are identified, classified and prioritised.

Of course this project is mission critical otherwise the management would not have bothered. So in order to start fixing the code of this big black box with the level of confidence required, you need to build a test harness. And to build the test harness, you need to discover what it is supposed to do, i.e. the requirements.

In other words you have to pay back the first creditors first.

1) Kaizen time

That does not mean you must restore dusty requirements documentation and manual test scripts like precious works of art.

Rather you want to take advantage of a lesson and an opportunity.

The lesson is staring right back at you: the cost of maintaining those assets was to high for the corner cutters in your organisation.

The opportunity comes from the time that has passed since those assets were created. Each year brings new ideas, methods and tools, a growing number of which are free.

It’s time to take a Lean look at those assets. For your work to be durable you don’t want them to stick out like big fat corners screaming «Cut me please !». Some of the forms these assets took in the past, such as large documents full of UML diagrams and manually written executable test scripts, were simply too high maintenance and should quietly go the way of the dodos.

Replace documents with structured tools that will store your requirements and use a simple framework like a keyword driven test automation framework to generate your tests. So shop around for the latest tools and methods and find the combination that works for your organisation.

2) Discover the requirements

For this you need the skills of an experienced tester and of a business analyst. He may not like it but the tester should have developed a knack for dealing with black boxes and reengineering requirements. So he should be able to figure out what the black box does. And with the input of the business analyst as to why the black box does what it does, together they should be able to come up with a set of requirements. The fact that they are two different individuals ensures that the requirements are well formulated and can be understood by third parties. Furthermore the business analyst helps prioritise the requirements. Store the requirements in the tool you previously selected.

3) Build the test harness

Next, the Most Important Tests (MITs) can be derived from the prioritised requirements. The tester can write those tests using the keyword driven framework. Even if those tests will never be automated, it is still a good idea to use one such framework for consistency purposes. But really, if at all possible, by all means use the framework to generate the executable test scripts. Remember they should only be the most important ones.

The last remaining obstacle between you and acceptance test driven refactoring bliss is test data. And this is often where test automation endeavours grind to an halt. Fortunately, the late frenzy about data has spawned a vibrant market for data handling tools and some of them have comprehensive data generating features. Talend is one of them. Personally I have recently used Databene Benerator and found it both reliable and easy to learn.

Mastering your test data makes a huge difference, it’s the key to unlock test automation. So it’s worth investing a little time in the tool you chose in order to achieve this objective.

Et voilà, now that you have paid the first two creditors you should be in a good position to pay the last one. Not only that, you have a built a solid foundation to keep them from coming back.

This article has also been posted on the www.ontechnicaldebt.com site: http://www.ontechnicaldebt.com/blog/technical-debt-paying-back-your-creditors/.

Follow

Follow Forward

Forward